Exploring the Advancements of GPT-4: From Image Processing to Test Taking

OpenAI’s latest language model, GPT-4, has officially been announced, but what can it do that its predecessors couldn’t? Here are some of the biggest features and use cases to emerge so far.

On Tuesday, OpenAI announced GPT-4, its next-generation AI language model. While the company has cautioned that differences between GPT-4 and its predecessors are “subtle” in casual conversation, the system still has plenty of new capabilities. It can process images, for one, and OpenAI says it’s generally better at creative tasks and problem-solving.

Assessing these claims is tricky. AI models, in general, are extremely complex, and systems like GPT-4 are sprawling and multifunctional, with hidden and as-yet-unknown capabilities. Fact-checking is also a challenge. When GPT-4 confidently tells you it’s created a new chemical compound, for example, you won’t know if it’s true until you ask a few actual chemists. (Though this never stops certain bombastic claims going viral on Twitter.) As OpenAI states clearly in its technical report, GPT-4’s biggest limitation is that it “hallucinates” information (makes it up) and is often “confidently wrong in its predictions.”

These caveats aside, GPT-4 is definitely technically exciting and is already being integrated into big, mainstream products. So, to get a feel for what’s new, we’ve collected some examples of its feats and abilities from news outlets, Twitter, and OpenAI itself, as well as run our own tests. Here’s what we know:

It can process images alongside text

As mentioned above, this is the biggest practical difference between GPT-4 and its predecessors. The system is multimodal, meaning it can parse both images and text, whereas GPT-3.5 could only process text. This means GPT-4 can analyze the contents of an image and connect that information with a written question. (Though it can’t generate images like DALL-E, Midjourney, or Stable Diffusion can.)

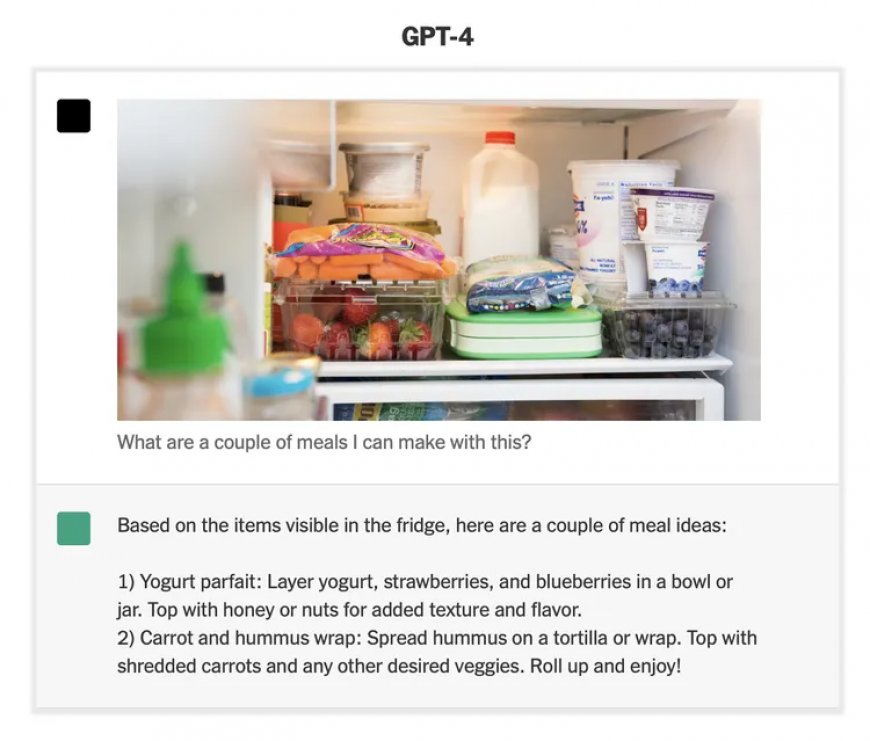

What does this mean in practice? The New York Times highlights one demo where GPT-4 is shown the interior of a fridge and asked what meals you can make with the ingredients. Sure enough, based on the image, GPT-4 comes up with a few examples, both savory and sweet. However, it’s worth noting that one of these suggestions — a wrap — requires an ingredient that doesn’t seem to be there: a tortilla.

There are lots of other applications for this functionality. In a demo streamed by OpenAI after the announcement, for example, the company showed how GPT-4 can create the code for a website based on a hand-drawn sketch. And OpenAI is also working with startup Be My Eyes, which uses object recognition or human volunteers to help people with vision problems, to improve the company’s app with GPT-4.

This sort of functionality isn’t entirely unique (plenty of apps offer basic object recognition, like Apple’s Magnifier app), but OpenAI claims GPT-4 can “generate the same level of context and understanding as a human volunteer” — explaining the world around the user, summarizing cluttered webpages, or answering questions about what it “sees.” The functionality isn’t yet live but “will be in the hands of users in weeks,” says the company.

Other firms have apparently been experimenting with GPT-4’s image recognition abilities as well. Jordan Singer, a founder at Diagram, tweeted that the company is working on adding the tech to its AI design assistant tools, including a chatbot that can comment on designs and a tool that can help generate them.

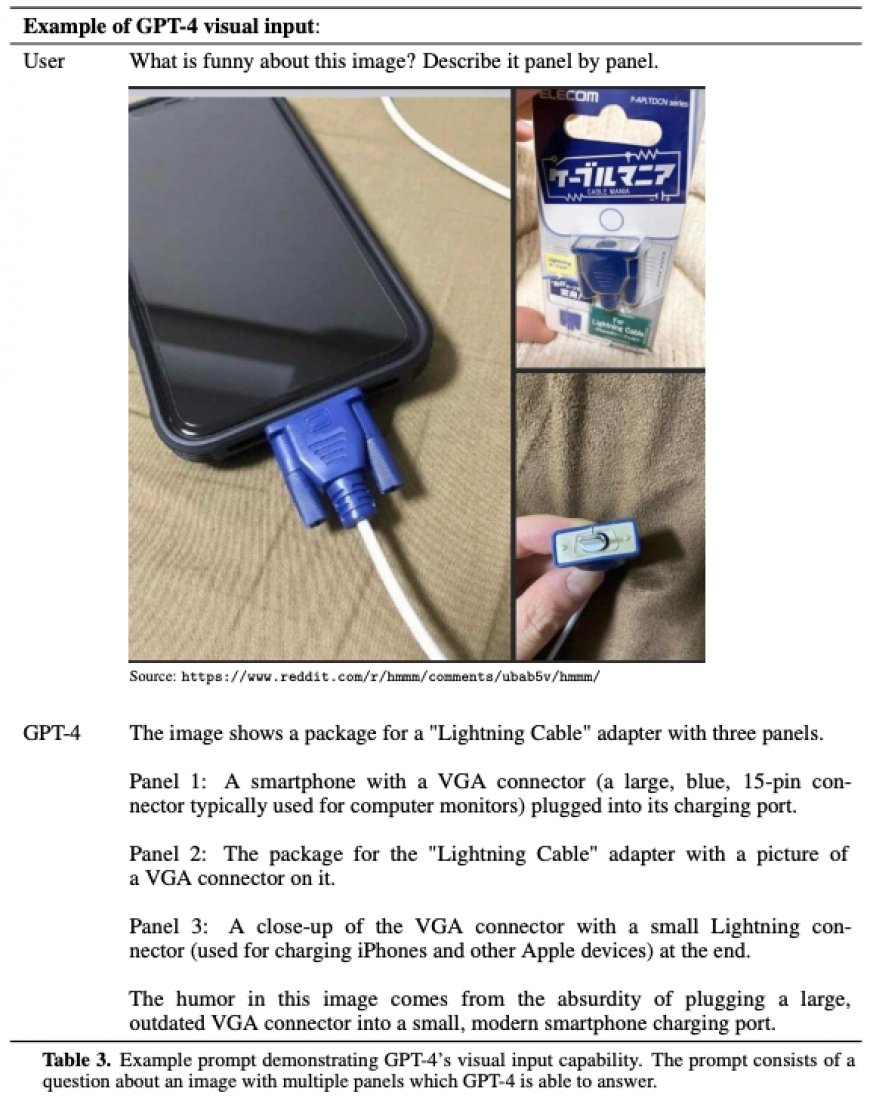

And, as demonstrated by the images below, GPT-4 can also explain funny images:

It’s better at playing with language

OpenAI says that GPT-4 is better at tasks that require creativity or advanced reasoning. It’s a hard claim to evaluate, but it seems right based on some tests we’ve seen and conducted (though the differences with its predecessors aren’t startling so far).

During a company demo of GPT-4, OpenAI co-founder Greg Brockman asked it to summarize a section of a blog post using only words that start with “g.” (He also later asked it to do the same but with “a” and “q.”) “We had a success for 4, but never really got there with 3.5,” said Brockman before starting the demo. In OpenAI’s video, GPT-4 responds with a reasonably understandable sentence with only one word not beginning with the letter “g,” and gets it completely right after Brockman asks it to correct itself. GPT-3.5, meanwhile, didn’t even seem to try to follow the prompt.

In our own test, when we asked the two models to fix their mistakes, GPT-3.5 basically gave up, whereas GPT-4 produced an almost-perfect result. It still included “on,” but to be fair, we missed it when asking for a correction.

It can process more text

AI language models have always been limited by the amount of text they can keep in their short-term memory (that is: the text included in both a user’s question and the system’s answer). But OpenAI has drastically expanded these capabilities for GPT-4. The system can now process whole scientific papers and novellas in one go, allowing it to answer more complicated questions and connect more details in any given query.

It’s worth noting that GPT-4 doesn’t have a character or word count per se, but measures its input and output in a unit known as “tokens.” This tokenization process is pretty complicated, but what you need to know is that a token is equal to roughly four characters and that 75 words generally take up around 100 tokens.

The maximum number of tokens GPT-3.5-turbo can use in any given query is around 4,000, which translates into a little more than 3,000 words. GPT-4, by comparison, can process about 32,000 tokens, which, according to OpenAI, comes out at around 25,000 words. The company says it’s “still optimizing” for longer contexts, but the higher limit means that the model should unlock use cases that weren’t as easy to do before.
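To make the arithmetic concrete, here is a minimal Python sketch of the rules of thumb quoted above (roughly four characters per token, and about 75 words per 100 tokens). The function names are hypothetical, and the ratios are rough averages rather than the output of a real tokenizer, so treat the results as ballpark figures only.

```python
# Rough averages taken from the text above; real tokenizers vary by
# language and content, so these are estimates, not exact counts.
WORDS_PER_TOKEN = 75 / 100   # about 75 words per 100 tokens
CHARS_PER_TOKEN = 4          # about four characters per token


def estimate_tokens_from_words(word_count: int) -> int:
    """Estimate how many tokens a given number of words takes up."""
    return round(word_count / WORDS_PER_TOKEN)


def estimate_words_from_tokens(token_count: int) -> int:
    """Estimate how many words fit in a given number of tokens."""
    return round(token_count * WORDS_PER_TOKEN)


def estimate_tokens_from_text(text: str) -> int:
    """Estimate the token count of a string from its character length."""
    return round(len(text) / CHARS_PER_TOKEN)


def fits_in_context(word_count: int, context_tokens: int) -> bool:
    """Check whether a text of word_count words fits a token limit."""
    return estimate_tokens_from_words(word_count) <= context_tokens


if __name__ == "__main__":
    # Approximate limits quoted in the article.
    print(estimate_words_from_tokens(4_000))
    print(estimate_words_from_tokens(32_000))
    print(fits_in_context(20_000, 32_000))
```

By this rule of thumb, a 4,000-token limit works out to about 3,000 words and a 32,000-token limit to about 24,000 words, which is in the same range as the figure of around 25,000 words that OpenAI cites.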

It can ace tests

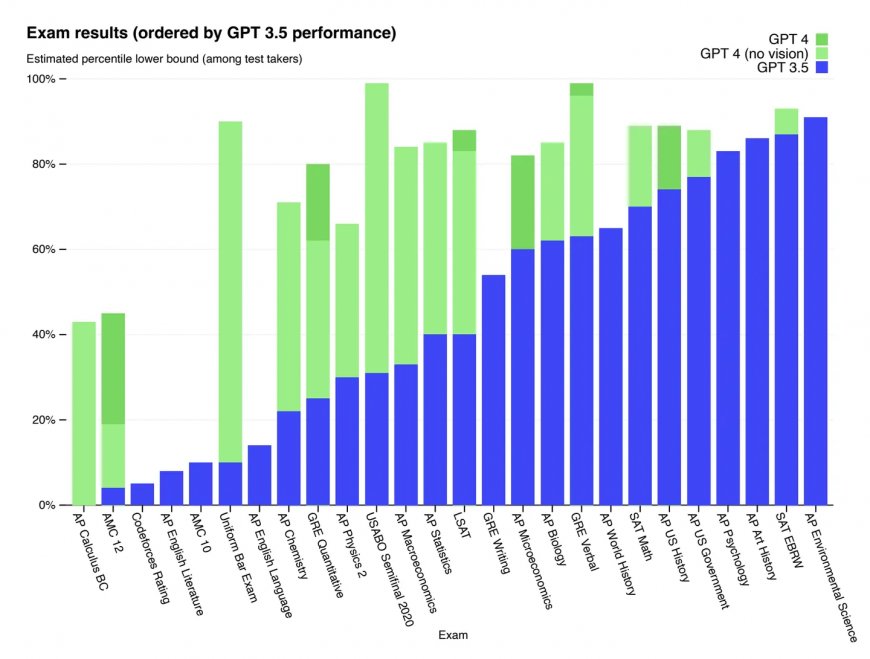

One of the stand-out metrics from OpenAI’s technical report on GPT-4 was its performance on a range of standardized tests, including the bar exam, the LSAT, the GRE, a number of AP modules, and (for some unknown but very funny reason) the Introductory, Certified, and Advanced Sommelier courses offered by the Court of Master Sommeliers (theory only).

You can see a comparison of GPT-4 and GPT-3.5’s results on some of these tests below. Note that GPT-4 is now pretty consistently acing various AP modules, but still struggles with those that require more creativity (i.e., English Language and English Literature exams).

It’s an impressive showing, especially compared to what past AI systems would have achieved, but understanding the achievement also requires a little context. I think engineer and writer Joshua Levy put it best on Twitter, describing the logical fallacy that many succumb to when looking at these results: “That software can pass a test designed for humans does not imply it has the same abilities as humans who pass the same test.”

Computer scientist Melanie Mitchell addressed this issue at greater length in a blog post discussing ChatGPT’s performance on various exams. As Mitchell points out, the capacity of AI systems to pass these tests relies on their ability to retain and reproduce specific types of structured knowledge. It doesn’t necessarily mean these systems can then generalize from this baseline. In other words: AI may be the ultimate example of teaching to the test.

It’s already being used in mainstream products

As part of its GPT-4 announcement, OpenAI shared several stories about organizations using the model. These include an AI tutor feature being developed by Khan Academy that’s meant to help students with coursework and give teachers ideas for lessons, and an integration with Duolingo that promises a similar interactive learning experience.

Duolingo’s offering is called Duolingo Max and adds two new features. One will give a “simple explanation” about why your answer for an exercise was right or wrong and let you ask for other examples or clarification. The other is a “roleplay” mode that lets you practice using a language in different scenarios, like ordering coffee in French or making plans to go on a hike in Spanish. (Currently, those are the only two languages available for the feature.) The company says that GPT-4 makes it so “no two conversations will be exactly alike.”

Other companies are using GPT-4 in related domains. Intercom announced today it’s upgrading its customer support bot using the model, promising the system will connect to a business’s support docs to answer questions, while payment processor Stripe is using the system internally to answer employee questions based on its technical documentation.

It’s been powering the new Bing all along

After OpenAI’s announcement, Microsoft confirmed that the model helping power Bing’s chat experience is, in fact, GPT-4.

It’s not an earth-shattering revelation. Microsoft had already said it was using a “next-generation OpenAI large language model” but had shied away from naming it as GPT-4. Still, it’s good to know, and it means we can use some of what we learned from interactions with Bing to think about GPT-4, too.

And on that note...

It still makes mistakes

Obviously, the Bing chat experience isn’t perfect. The bot tried to gaslight people, made silly mistakes, and asked our colleague Sean Hollister if he wanted to see furry porn. Some of this will be because of the way Microsoft implemented GPT-4, but these experiences give some idea of how chatbots built on these language models can make mistakes.

In fact, we’ve already seen GPT-4 make some flubs in its first tests. In The New York Times’ article, for example, the system is asked to explain how to pronounce common Spanish words... and gets almost every single one of them wrong. (I asked it how to pronounce “gringo,” though, and its explanation seemed to pass muster.)

“NYTimes shares this guide to Spanish pronunciation as proof of GPT-4’s improvements ... but virtually none of it is correct!” (Christopher Grobe, @Confessant, on Twitter, March 14, 2023)

This isn’t some huge gotcha, but a reminder of what everyone involved in creating and deploying GPT-4 and other language models already knows: they mess up. A lot. And any deployment, whether as a tutor or salesperson or coder, needs to come with a prominent warning saying as much.

OpenAI CEO Sam Altman discussed this in January when asked about the capabilities of the then-unannounced GPT-4: “People are begging to be disappointed and they will be. The hype is just like... We don’t have an actual AGI and that’s sort of what’s expected of us.”

Well, there’s no AGI yet, but there is a system that is more broadly capable than any we’ve had before. Now we wait for the most important part: to see exactly how and where it will be used.